Learn More About EU AI Act Compliance

Get on the path to EU AI Act compliance and start your global AI compliance program today

- The EU AI Act is the first major global AI regulation, requiring significant action by AI vendors as well as deployers of AI systems

- Complying with the AI Act is the best first step to a global AI compliance program

- With the text of the Act nearly final, now is the time to start the compliance process

The tapestry of emerging supranational, national, state, and even municipal AI regulation, together with a growing list of voluntary frameworks such as NIST, OECD, and ISO, has created confusion. Many organizations want to comply but don't know where to start.

The EU is deliberately positioning itself as the first international body to comprehensively regulate AI. By going first, it aims to set the global standard, an effect seen with the passage of GDPR and referred to as the "Brussels Effect."

Other jurisdictions, such as Canada, Australia, and Switzerland, are expected to pass very similar legislation

EU compliance will provide the systems, governance, and reporting necessary for >95% of global AI regulations

EU AI Act compliance is the best place to focus AI compliance resources

The EU AI Act takes a "risk-based approach," classifying AI by both use case (for example, "education") and technology (for example, "biometrics")

The EU AI Act is expected to pass in April 2024 and take effect on a rolling basis, with the first provisions applying six months after it enters into force

Fines for noncompliance will be up to 7% of global revenue

The AI Act has obligations for developers as well as “deployers” of AI systems

The AI Act will require providers of "high-risk" AI applications to produce extensive documentation

Required documents for vendors of high-risk systems include:

Risk Management System

Data Governance Policy

Technical Documentation

Accuracy, Robustness, and Cybersecurity Policy

Instructions and documentation for deployers (customers)

Human Oversight and Transparency Policy

Quality Management System

Fundamental Rights Impact Assessment (in certain cases only)

Conformity Assessment and EU Declaration of Conformity

AI systems will ultimately need to be registered in an EU AI Database

Compliance Process

Proceptual Compliance Process

The Proceptual compliance process moves organizations to compliance, producing the extensive reporting the Act requires

Registry and Risk Assessment

Create a registry of AI tools in use, both internally developed and vendor-sourced

Assign risk classification to each tool

1 Month
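To make the registry step concrete, here is a minimal sketch of a registry that records each tool's source, use case, and technology and assigns a risk tier. It assumes the commonly cited four-tier structure (unacceptable, high, limited, minimal); the class names, the keyword sets, and the `classify` rule are hypothetical simplifications for illustration, not a legal classification method.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical, heavily simplified lookup tables. A real classification
# depends on the Act's annexes and legal review, not keyword matching.
PROHIBITED_USES = {"social scoring", "untargeted facial scraping"}
HIGH_RISK_USES = {"education", "employment", "medical device", "critical infrastructure"}
HIGH_RISK_TECH = {"biometrics"}


@dataclass
class AITool:
    name: str
    source: str  # "internal" or "vendor"
    use_case: str
    technology: str
    interacts_with_people: bool = False


def classify(tool: AITool) -> RiskTier:
    """Assign a tier by checking use case and technology, most severe first."""
    if tool.use_case in PROHIBITED_USES:
        return RiskTier.UNACCEPTABLE
    if tool.use_case in HIGH_RISK_USES or tool.technology in HIGH_RISK_TECH:
        return RiskTier.HIGH
    if tool.interacts_with_people:
        # Assumption: user-facing systems such as chatbots carry transparency duties.
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


def build_registry(tools: list[AITool]) -> dict[str, RiskTier]:
    """Map each tool name to its assigned risk tier."""
    return {tool.name: classify(tool) for tool in tools}


registry = build_registry([
    AITool("resume-screener", "vendor", "employment", "nlp"),
    AITool("support-chatbot", "internal", "customer support", "llm", interacts_with_people=True),
    AITool("spam-filter", "internal", "email filtering", "classifier"),
])
for name, tier in registry.items():
    print(f"{name}: {tier.value}")
```

Checking the most severe tier first means a tool that matches several categories is always reported under the strictest one.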

Data Collection

Proceptual sends a custom information and data request

Answers and data are uploaded to our secure portal

1 Month

Create Gap Analysis and Iterate

Proceptual delivers a list of missing or incomplete information

We recommend non-technical mitigation measures

1-2 Months
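At its core, the gap analysis compares what has been submitted against the required document list. The sketch below shows that comparison under simple assumptions: each submitted document is marked complete or incomplete, the names are shortened from the list of required documents for high-risk systems, and the function and parameter names (`gap_analysis`, `needs_fria`) are hypothetical. The Conformity Assessment is left out because it is issued in a later step.

```python
from __future__ import annotations

# Core documents from the required-documents list, shortened for illustration.
REQUIRED_DOCUMENTS = [
    "Risk Management System",
    "Data Governance Policy",
    "Technical Documentation",
    "Accuracy, Robustness, and Cybersecurity Policy",
    "Instructions for Deployers",
    "Human Oversight and Transparency Policy",
    "Quality Management System",
]
# Required only in certain cases, so it is added conditionally.
CONDITIONAL_DOCUMENT = "Fundamental Rights Impact Assessment"


def gap_analysis(submitted: dict[str, bool], needs_fria: bool = False) -> dict[str, list[str]]:
    """Compare submitted documents against the required list.

    `submitted` maps a document name to True (complete) or False (draft or incomplete).
    """
    required = REQUIRED_DOCUMENTS + ([CONDITIONAL_DOCUMENT] if needs_fria else [])
    missing = [doc for doc in required if doc not in submitted]
    incomplete = [doc for doc in required if submitted.get(doc) is False]
    return {"missing": missing, "incomplete": incomplete}


report = gap_analysis({
    "Risk Management System": True,
    "Data Governance Policy": True,
    "Technical Documentation": False,
})
print("Missing:", report["missing"])
print("Incomplete:", report["incomplete"])
```

Iterating then amounts to re-running the comparison as new material is uploaded, until both lists are empty.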

Produce Required Reporting

Proceptual assembles data and produces all required reports

1-2 Months

Issue Conformity Assessment

When applicable, Conformity Assessment and EU Declaration of Conformity are drafted and issued

1 Month

FAQs

The Most Common Questions

Find clear answers to common questions about our AI training, services, and support. We're here to help, so explore answers to your most frequent AI and compliance questions.

We don’t make AI systems. Does this apply?

Yes. Much like GDPR, the AI Act will apply both to vendors of AI systems and to the companies that deploy those systems in the European market. Given how common AI components are in everyday software, nearly every company will need to consider AI Act compliance.

What systems are banned as unacceptable?

The AI Act bans a number of systems, including biometric categorization systems that use certain protected characteristics, untargeted scraping of facial images, social scoring systems, and emotion recognition systems in workplaces and schools.

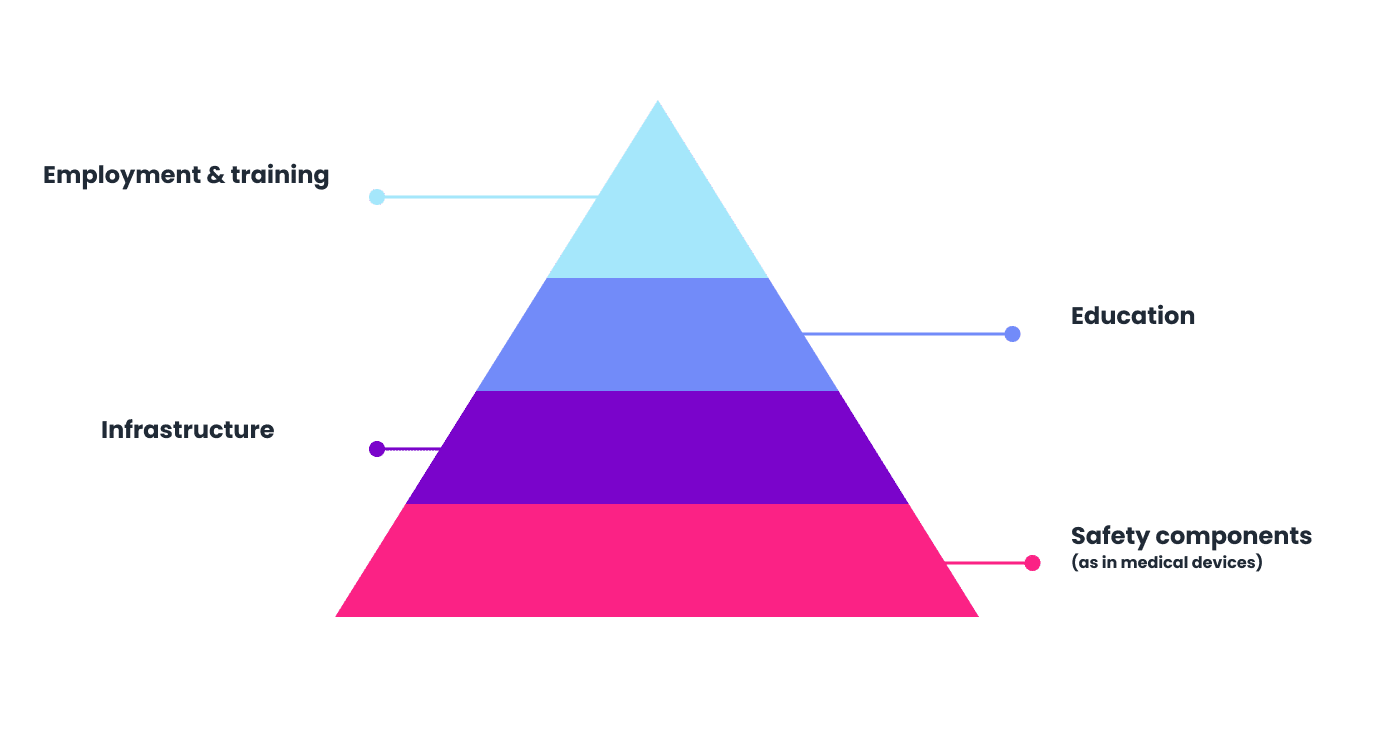

What AI systems are designated high-risk?

A wide range of systems will be labeled high-risk and carry significant compliance obligations. High-risk systems include (but are not limited to):

- Medical devices

- Infrastructure (for example, electricity generation)

- Systems used in education, vocational training, and employment

When should companies start preparing for the AI Act?

Now is the right time to start preparing. While the entirety of the act will not be in force until 2026, some portions of the law, such as the ban on certain “unacceptable risk” systems, are likely to go into effect in late 2024.

What are the penalties for non-compliance?

Fines are substantial, with the higher of the fixed amount or the percentage applying:

- 35 million euros or 7% of total worldwide annual revenue for violations involving banned applications

- 15 million euros or 3% of total worldwide annual revenue for violations of the Act's other obligations

- 7.5 million euros or 1.5% of total worldwide annual revenue for supplying incorrect information

There will be certain caps for startups and small businesses that have not yet been defined.
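The arithmetic behind these penalty tiers is a simple maximum. The sketch below computes the maximum exposure for a given worldwide annual revenue, assuming the higher of the fixed amount and the percentage applies (the "whichever is higher" rule also used for GDPR fines). It uses the tiers listed above, expressed in basis points so the integer math is exact; the tier keys and function name are hypothetical, and the still-undefined caps for startups and small businesses are ignored.

```python
# (fixed amount in euros, percentage of worldwide annual revenue in basis points)
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 700),   # 7%
    "other_obligation": (15_000_000, 300),      # 3%
    "incorrect_information": (7_500_000, 150),  # 1.5%
}


def max_fine_eur(violation: str, worldwide_revenue_eur: int) -> int:
    """Return the higher of the fixed cap and the revenue-based cap for a tier."""
    fixed, basis_points = FINE_TIERS[violation]
    revenue_based = worldwide_revenue_eur * basis_points // 10_000
    return max(fixed, revenue_based)


# A company with 2 billion euros in revenue: 7% (140M) exceeds the 35M floor.
print(max_fine_eur("prohibited_practice", 2_000_000_000))  # 140000000
# A company with 100 million euros in revenue: 7% is only 7M, so 35M applies.
print(max_fine_eur("prohibited_practice", 100_000_000))  # 35000000
```

For large companies the percentage dominates, which is why the fixed amounts matter mainly for smaller organizations.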