Learn More About Colorado AI Act Compliance

We Can Help Your Organization Get Compliant With the Colorado AI Act

- The Colorado AI Act (SB 24-205) imposes significant compliance obligations on both the developers and deployers of “high-risk” AI systems

- The law is designed to reduce “algorithmic discrimination” resulting from high-risk AI applications

- The law covers companies that do business in Colorado or that sell to Colorado consumers

- Provisions go into effect in February 2026

The tapestry of emerging supranational, national, state, and even municipal AI regulation, together with a growing list of voluntary frameworks from bodies like NIST, OECD, and ISO, has created confusion.

Many organizations want to comply with new laws like the Colorado AI Act but don’t know where to start. Smart organizations will take a proactive, global perspective, putting in place the governance structures and documentation needed to comply with upcoming regulations in California, New York, the EU, Canada, Australia, and many other jurisdictions.

Companies developing and/or using “high-risk” AI systems should take a global (rather than patchwork) approach

The Colorado AI Act classifies a number of AI use cases as “high-risk.”

Proceptual’s compliance process moves organizations to compliance and develops the extensive reporting the law requires

Compliance Path

Compliance Process

Registry and Risk Assessment

Create a registry of AI tools in use, both internally developed and vendor-sourced

Assign a risk classification to each tool

1 Month
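
As a rough illustration of the registry step, the sketch below records each tool with its source (internal or vendor) and the decision areas it touches, then assigns a risk label. The class names, fields, and the two-label rule are assumptions made for this example, not Proceptual's actual method and not the Act's legal test. A real classification would need review against the statute.

```python
from dataclasses import dataclass, field
from enum import Enum


class DecisionArea(Enum):
    """Decision areas listed as high-risk in the FAQ on this page."""
    EDUCATION = "education enrollment or opportunity"
    EMPLOYMENT = "employment or employment opportunity"
    FINANCIAL = "financial or lending service"
    GOVERNMENT = "essential government service"
    HEALTHCARE = "healthcare services"
    HOUSING = "housing"
    INSURANCE = "insurance"
    LEGAL = "legal services"


@dataclass
class AITool:
    """One registry entry (hypothetical fields, for illustration only)."""
    name: str
    source: str  # "internal" or "vendor"
    decision_areas: set[DecisionArea] = field(default_factory=set)

    def risk_class(self) -> str:
        # Simplified rule (assumption): a tool that touches any listed
        # decision area is flagged "high-risk"; everything else "lower-risk".
        return "high-risk" if self.decision_areas else "lower-risk"


# Example registry with one vendor tool and one internal tool.
registry = [
    AITool("resume-screener", "vendor", {DecisionArea.EMPLOYMENT}),
    AITool("meeting-summarizer", "internal"),
]

for tool in registry:
    print(f"{tool.name} ({tool.source}): {tool.risk_class()}")
```

Keeping the decision areas as an explicit set on each entry makes it easy to see why a tool was flagged, which helps when the classification is reviewed later.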

Data Collection

Proceptual sends custom information and data request

Answers and data uploaded to our secure portal

1 Month

Create Gap Analysis and Iterate

Proceptual delivers list of missing or incomplete information

We recommend non-technical mitigation measures

1-2 Months

Produce Required Reporting

Proceptual assembles data and produces all required reports

1-2 Months

FAQs

The Most Common Questions

Find clear answers to the most common questions about AI compliance and about our AI training, services, and support.

We don’t make AI systems. Does this apply?

Yes. The Colorado AI Act will impose significant responsibilities on the “deployers,” or users, of AI systems, not only on their developers.

What AI systems are designated high-risk?

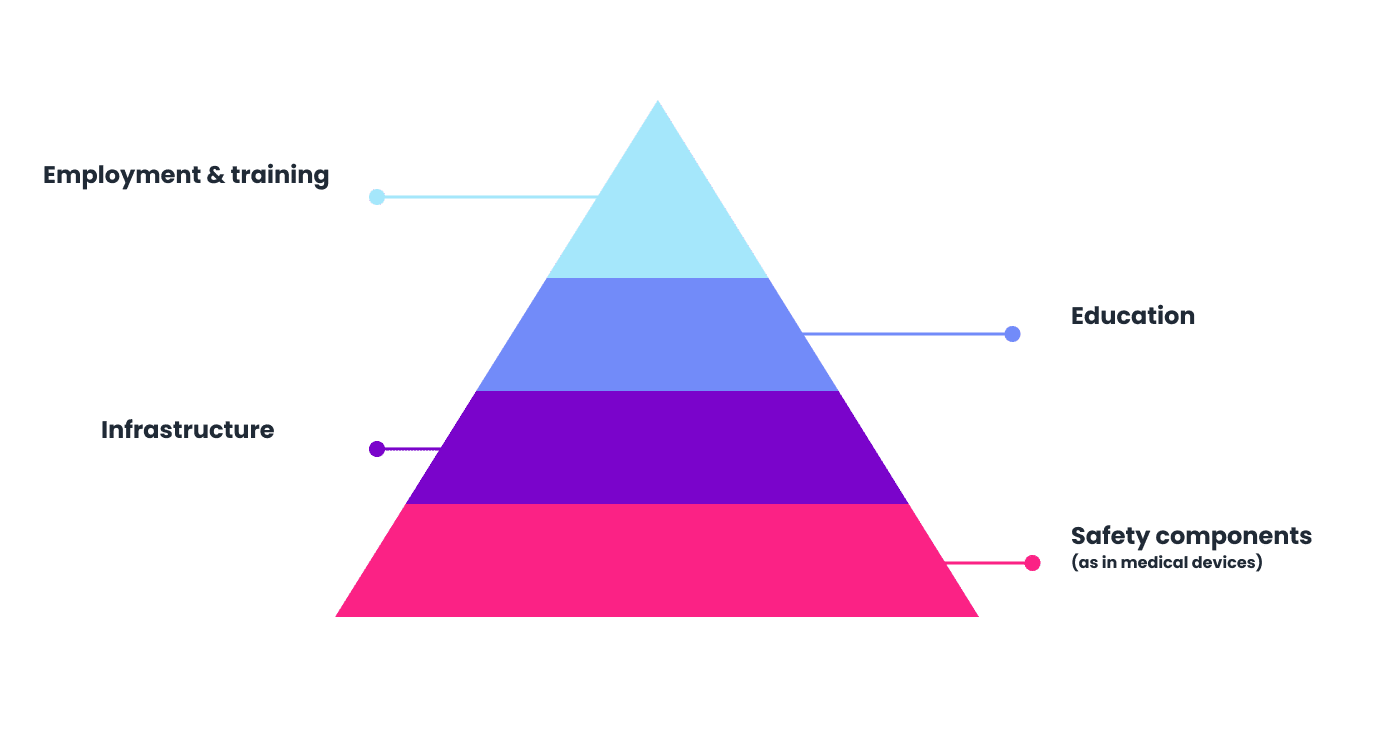

A wide range of systems will be labeled high-risk and carry significant compliance obligations. High-risk systems include (but are not limited to) those that make, or are a substantial factor in making, consequential decisions about:

- Education enrollment or an education opportunity

- Employment or an employment opportunity

- A financial or lending service

- An essential government service

- Healthcare services

- Housing

- Insurance

- Legal services

When should companies start preparing for the AI Act?

Now is the right time to start preparing. While the Act will not be fully in force until 2026, the compliance journey requires significant high-level planning. Organizations should plan to spend at least 6 months on compliance.